Grateful for AI, Thankful for Governance

Two things can be true at the same time. AI can transform how you work, while also requiring the proper governance to ensure accurate outputs. As I highlighted in Volume 2, I’m constantly working with AI tools to close knowledge gaps and build code to customize the experience on MindOverMoney.ai. This process keeps getting more efficient as the intelligence of large language models expands. But that doesn’t mean you can let AI write code, solve problems, generate answers, and help you work without governance in place. I want to walk you through my latest challenge and how AI saved the day, but only with my oversight.

The Problem:

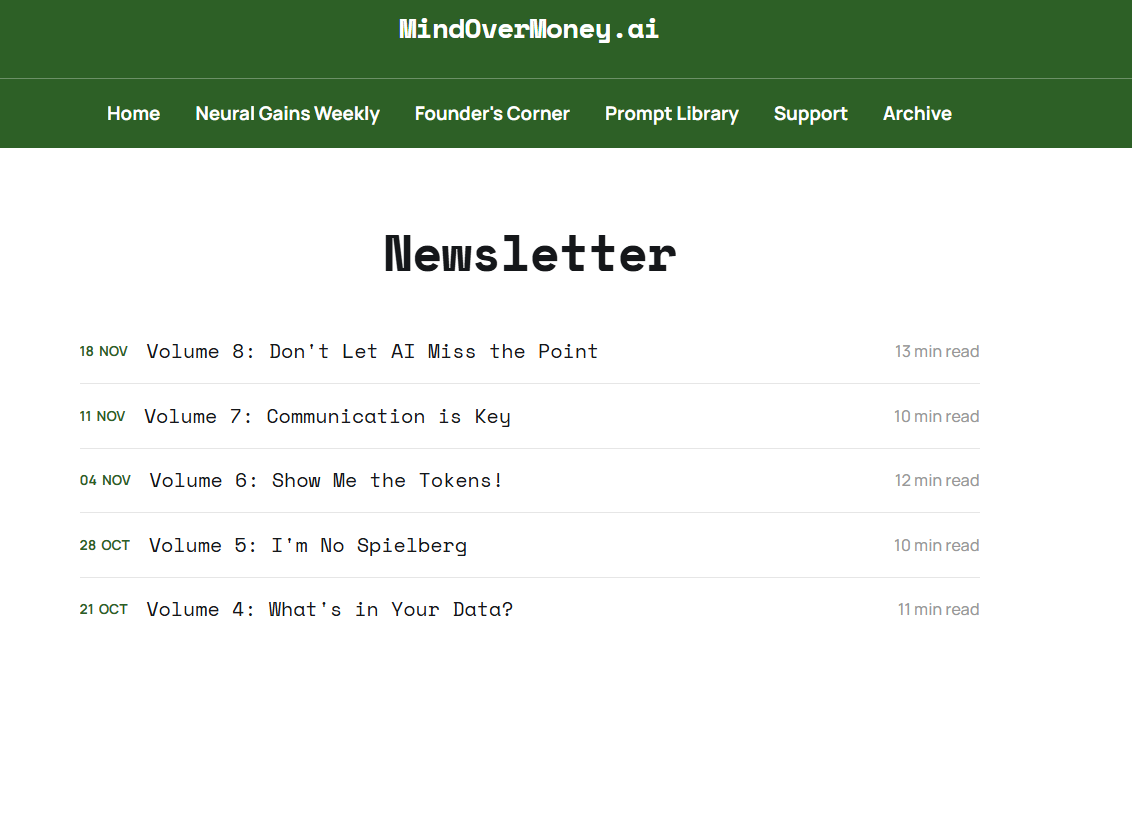

I recently ran into a critical bug on the website. The "Infinite Scroll" feature, which automatically loads older posts as you scroll down, was completely broken on the Archive, Founder’s Corner, and Prompt Library pages (see the visual above). If you scrolled to the bottom, nothing happened. The older content was stranded, inaccessible to anyone visiting the site. That is not a great look when new subscribers who want to start at the beginning try to open these pages. As I’ve mentioned before, I am not a coder. I rely on my AI partner (Gemini, in this case) to act as my lead developer. So, I did what I always do: I explained the problem, pasted my code, and asked for a fix.

The Process:

Gemini 2.5 Pro with Thinking is a great model, and it went to work analyzing my current code in GitHub to look for a solution. I find it fascinating to read the model’s ‘thinking’ process as it scours thousands of lines of code in a matter of seconds. AI’s ability to read and write code seems like magic and highlights the power of this technology. Gemini confidently identified the issue and generated a fix for me to deploy. I did my part, but the issue remained unsolved. This pattern continued for about an hour and led to roughly 15 code changes. The last change fixed the "Infinite Scroll" issue but broke three other elements of my site. AI is powerful, but the human element was needed to get this project back on track.

I stopped the deployment efforts and reset the discovery process in the chat. It was time to debug the AI itself. I went through each element of the website that was broken and gave direct instructions to my AI coding assistant. Specifically, I had the AI navigate to the website and experience the breaks as if it were a new visitor. I shared the live source code so it could be compared with the GitHub code. I instructed the AI to research online forums and YouTube to find the correct solution before writing the final code change. After 30 minutes of research and dialogue, we finally found the exact root cause. Gemini was writing perfect code for a source file (main.js), but my live website was running on a compiled, minified version (main.min.js) that wasn't being updated by my deployment process. The AI was solving the right problem in the wrong room. It took collaboration and strict oversight from me to deploy a solution that fully fixed the original issue without breaking other aspects of the site.
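For readers who want to catch this kind of mismatch earlier, here is a minimal sketch of a pre-deploy check. It is not the fix Gemini and I deployed, just an illustration of the idea: compare the source file with the built file and flag the build if it is missing or older. The function names `is_build_stale` and `stale_builds` are hypothetical, and the sketch assumes file modification times are meaningful in your setup (they may not be right after a fresh Git checkout, where comparing content hashes from a real build step would be safer).

```python
import os
from pathlib import Path


def is_build_stale(source: Path, built: Path) -> bool:
    """Return True when the built file is missing or older than its source.

    Assumption: modification times reflect when each file last changed.
    """
    if not built.exists():
        return True
    return built.stat().st_mtime < source.stat().st_mtime


def stale_builds(pairs):
    """Return the (source, built) pairs whose built file needs regenerating."""
    return [(s, b) for s, b in pairs if is_build_stale(Path(s), Path(b))]


if __name__ == "__main__":
    # Hypothetical layout: main.js is edited by hand, main.min.js is what
    # the live site actually serves.
    for src, out in stale_builds([("main.js", "main.min.js")]):
        print(f"STALE: {out} is missing or older than {src}; rebuild before deploying")
```

Running a check like this before each deployment would have pointed at main.min.js on the first attempt instead of the fifteenth.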

The Lesson:

This experience reinforced a massive lesson that applies to anyone using AI: You cannot abdicate responsibility.

We often treat these models like magic boxes that have all the answers. We assume that because they can write code or summarize text, they understand the full context of our business or environment. They don't. In fact, research backs up exactly what I experienced. A study presented at the 2024 ACM CHI Conference on Human Factors in Computing Systems found that 52% of ChatGPT’s answers to programming questions contained incorrect information. Even more telling, study participants still preferred ChatGPT’s answers 35% of the time because they were polite, comprehensive, and well articulated. That is exactly the trap I fell into: the AI was confident, so I didn’t probe to ensure the right fix was being deployed.

I treated Gemini like a senior engineer who knows everything and broke my homepage in the process. But when I started treating it like a junior developer, talented but inexperienced and needing its work checked, I found the right solution. This aligns with what experts are seeing across the industry. The 2025 Stack Overflow Developer Survey reported that the biggest frustration, cited by 66% of developers, is dealing with AI solutions that are "almost right, but not quite." That "almost right" code is dangerous because it creates what experts are now calling "AI Debt": the hidden cost of cleaning up hasty AI code later.

Governance isn't just a corporate buzzword. For us "AI Doers," governance is the discipline of pausing and asking, "Does this actually make sense?" before we hit enter. You have to audit the output. You have to verify the logic. You have to be the one to say "Stop" when the solution looks risky. AI can write the code and create amazing outputs, but you still have to be the one to steer the ship. At least for now…