Volume 21: Build the Bridge Silicon Valley Won't

Hey everyone!

The Super Bowl gave us more than just football this year. Two of the biggest AI companies turned their ad slots into a public feud while Americans watched, confused and increasingly cynical. This week, we're talking about what that disconnect costs all of us trying to learn this technology.

🧭 Founder's Corner: Why the OpenAI-Anthropic Super Bowl spat is killing trust in AI, and how we build the bridge Silicon Valley won't.

🧠 AI Education: The RAG pipeline explained—how your files turn into the exact few lines the model needs to answer your question.

✅ 10-Minute Win: Turn a messy 3-minute voice memo into a clean "Do Now vs. Schedule Later" plan using Gemini.

Let's dive in.

Missed a previous newsletter? No worries: every past issue is on the Archive page. Don’t forget to check out the Prompt Library, where I share templates you can use on your AI journey.

Signals Over Noise

We scan the noise so you don’t have to — top 5 stories to keep you sharp

1) Testing ads in ChatGPT

Summary: OpenAI began testing clearly labeled “sponsored” ads inside ChatGPT in the U.S. for logged-in adult users on the Free and Go tiers. OpenAI says ads won’t influence answers and advertisers won’t see your chats.

Why it matters: This changes the “business model” story for consumer AI—ads can reshape what gets built, what gets prioritized, and how much users trust the product.

2) AI Super Bowl commercials: All the spots from Anthropic, OpenAI, Amazon, Google, Meta, and others

Summary: A big chunk of Super Bowl LX ads either promoted AI products directly or used AI in their creation—Fast Company points to iSpot’s count that it was nearly a quarter of all commercials. The piece rounds up the major AI-themed spots and what they were trying to communicate.

Why it matters: AI is now mainstream marketing, not niche tech—meaning public perception, adoption, and backlash will be shaped as much by ads and consumer messaging as by model quality.

3) Microsoft confirms plan to ditch OpenAI — as the ChatGPT firm continues to beg Big Tech for cash

Summary: Microsoft AI lead Mustafa Suleyman suggests Microsoft intends to reduce reliance on OpenAI over time, according to Windows Central. The article frames this around the strategic and financial tension of depending on a key partner for core AI capabilities.

Why it matters: If Microsoft shifts away from OpenAI, it could change what powers Copilot experiences across Windows/Office—and it signals a broader trend: big tech wants more control over its AI stack.

4) Anthropic safety researcher quits, warning ‘world is in peril’

Summary: A safety researcher at Anthropic, Mrinank Sharma, resigned publicly, warning the “world is in peril” and pointing to risk pressures (including bioterrorism concerns) tied to advanced AI. Semafor also notes ongoing debate about whether AI progress is moving too fast and what regulation should look like.

Why it matters: Safety isn’t abstract anymore—high-profile departures like this shape how companies are pressured (internally and externally) to slow down, add guardrails, or accept oversight.

5) DeSantis and Florida's House Differ on AI Legislation

Summary: Florida’s governor is pushing AI-related proposals (including an “AI Bill of Rights” and rules tied to the resource impact of large data centers), while Florida’s House hasn’t advanced parallel measures yet. The story highlights state-level AI regulation colliding with federal pressure for a more unified national approach.

Why it matters: The AI rulebook is being written in real time—state policy fights can quickly affect data centers, consumer protections, and what companies are allowed (or forced) to ship.

Founder's Corner

Silicon Valley Needs a Reality Check: How Bad Sentiment Is Killing Progress

Silicon Valley is building the future in a bubble. And that bubble is creating a dangerous gap between the people building AI and the people who actually need to use it.

I've never worked in Silicon Valley, but the disconnect isn't a perception anymore. It's a reality. Every decision made by the big AI labs gets scrutinized under a microscope. The stock market swings on capex spending reports. The media frames every layoff as "AI took their job." Social media spirals into hysteria about AI agents creating their own platforms. Meanwhile, normal people are just trying to figure out if they should even care about any of this.

If AI is going to change the world, you'd think the companies building it would prioritize adoption and education over corporate warfare. You'd be wrong.

The Super Bowl is sacred in American culture. It's the rare event that mashes sports, entertainment, and business into one highly watched spectacle. For me, it's the last football game until fall. For others, the commercials are the main attraction. Brands pay millions for 30 seconds of ad real estate to tell their story and connect with consumers. This year, AI companies entered the Super Bowl ad war with a variety of campaigns. But one ad in particular went against the grain and potentially damaged the perception of the entire AI industry.

Anthropic released an ad campaign directly targeting OpenAI, hammering it over the ads now coming to ChatGPT. The spots depicted common scenarios: a person asks an AI for advice, gets helpful answers, and is then interrupted mid-conversation by a product pitch. The tagline: "Ads are coming to AI. But not to Claude."

Sam Altman, CEO of OpenAI, responded immediately on X. He called the ads "clearly dishonest" and then attacked Anthropic's entire philosophy: "They want to write the rules themselves for what people can and can't use AI for. An authoritarian company won't get us there alone... That's a dark path." He accused them of serving "an expensive product to rich people" while positioning OpenAI as the company for the masses.

While these two AI giants fought in public, actual Americans were bombarded with negative headlines and rhetoric that fuel cynicism instead of progress.

Why does any of this matter? It's just a commercial. Who takes these seriously?

The data does. And it paints a harsh reality.

According to the 2026 Edelman Trust Barometer, the US has a massive trust gap when it comes to AI:

- Just 32% of Americans trust artificial intelligence – one of the lowest levels of any country. The global average is 49%. China is at 72%.

- Nearly half of Americans (49%) reject the growing use of AI, while only 17% embrace it. Compare that to China: 54% embrace, 10% reject.

- 65% of lower-income Americans believe people like them will be "left behind" rather than benefit from generative AI.

- 70% of Americans believe CEOs are not being fully honest about how AI will impact jobs.

Read those numbers again. This isn't a marketing problem. This is a crisis of trust.

Skepticism is growing, and the most powerful tech CEOs are squabbling over business strategies while completely ignoring the reality facing 99% of the population. AI advertising accounted for 23% of Super Bowl commercials this year, yet the news cycle focused almost entirely on the Anthropic-OpenAI spat.

The opportunity wasn't just missed. It was squandered. These advertisements should have built confidence in the general public, not alienated current and future users. Instead, Silicon Valley reinforced every fear people already had: that this technology is being built by people who don't understand them, don't see them, and don't care about the disruption they're causing.

The Gap Is Widening

I see this disconnect every week. Not in surveys, but in real conversations. People asking if they need to care about AI. Subscribers to Neural Gains Weekly trying to figure out where to start the learning process. Many of us are stuck between hype and fear, unsure which way to move.

The gap between what this technology can do and what people actually know about it is widening. Fast. Stunts like the Super Bowl spat don't just fail to close that gap. They make it worse. They paint AI as a battleground for billionaires, not a tool for everyday professionals. They turn curiosity into cynicism.

We don't need two CEOs measuring whose model is better. We need someone showing why any of this matters and how it applies to our everyday lives. We need a path forward, not two companies blocking the road to score points.

We need positive momentum right now. Not because I'm cheerleading for AI, but because the alternative is a country that rejects the most significant technological shift of our generation out of fear and confusion.

Building the Bridge

So here's what I'm NOT doing. I'm not waiting for Silicon Valley to figure out how to talk to normal people. I'm not hoping the next ad campaign will magically build trust.

I'm building anyway.

Every week, I show up and share what I'm learning. I document my failures. I explain workflows in plain English. I help non-technical professionals go from "AI user" to "AI builder" one step at a time. Not because I'm smarter than anyone else, but because I'm a few steps ahead and willing to share my journey.

That's the bridge Silicon Valley isn't building. The one between their technology and our reality. Between their benchmarks and our workflows. Between their vision and our Tuesday afternoon.

If they won't build it, we will. Here's how:

Have one AI conversation with a skeptical coworker this week. Not a lecture. A conversation. Ask them what they're hearing about AI. Listen to their concerns. Then show them one thing you've built that saves you time. One workflow. One prompt. One small win. Don't sell them on the future. Show them what's possible right now.

Build one workflow and share it. Doesn't have to be fancy. A prompt that summarizes meeting notes. A way to draft emails faster. A research process that actually works. Build it. Document it. Share it on LinkedIn, in a Slack channel, with your team. Make the invisible visible.

These actions won't show up in Edelman's next survey. But they'll shift momentum in your circle. And momentum compounds.

So let them have their Super Bowl ads and their social media battles. We've got work to do.

AI Education for You

Part 3: RAG 101 — From Files to Answer

At this point you know the building blocks. The missing piece is the assembly line. How do documents turn into “the right few lines” that get placed in front of the model at the moment you ask a question?

Core lesson

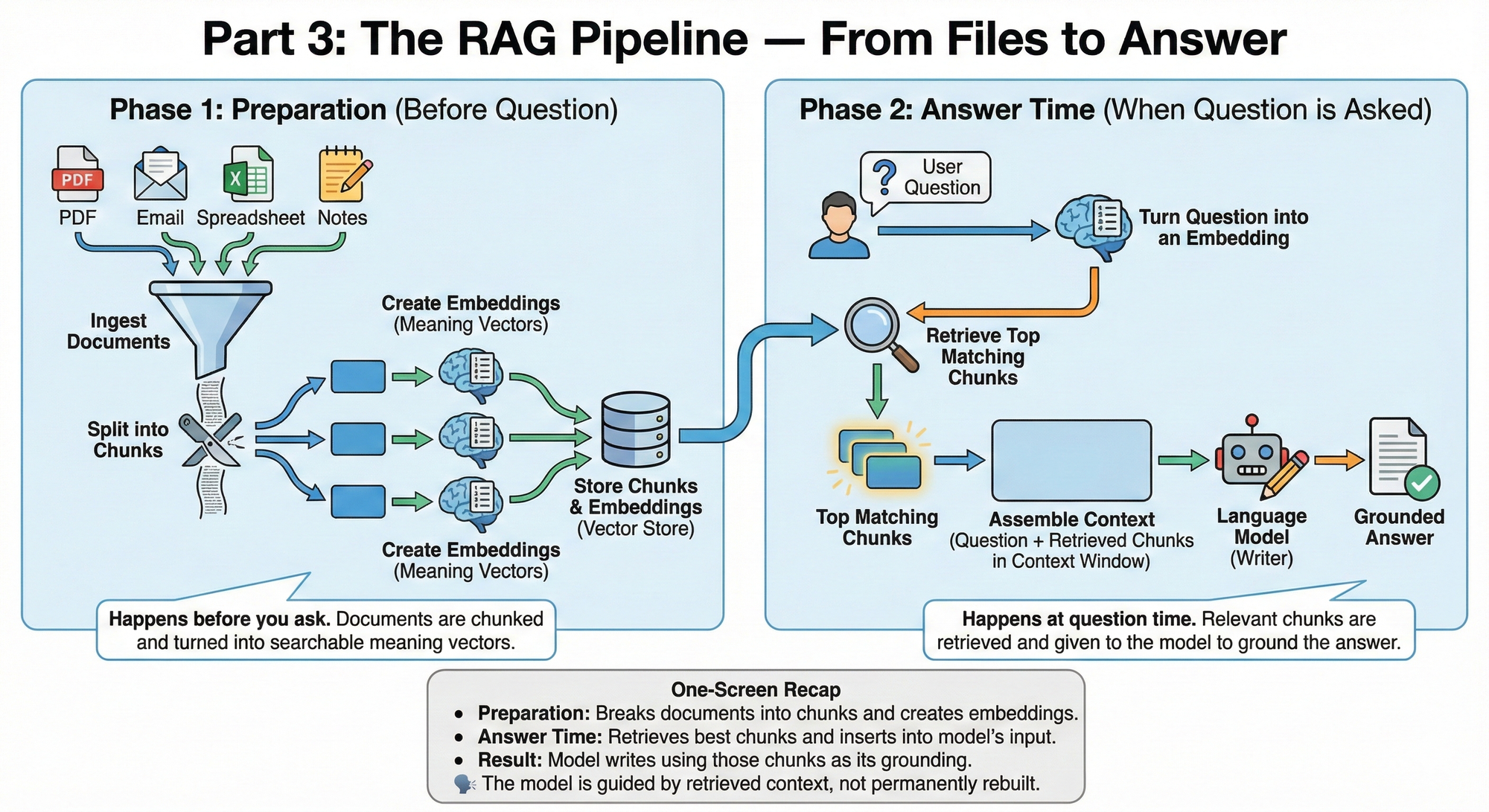

A typical retrieval-augmented generation pipeline has two phases.

Phase 1: Preparation

This happens before you ask anything.

- Ingest the documents: Bring in PDFs, emails, spreadsheets, or notes.

- Split into chunks: Break the text into smaller sections so search can grab a specific part instead of the whole document.

- Create embeddings for each chunk: Turn each chunk into a meaning vector so meaning search is possible.

- Store the chunks and embeddings: Often in a vector store, which is a system designed to search these embeddings efficiently.
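To make Phase 1 concrete, here is a minimal Python sketch of preparation, assuming plain-text documents. The `embed` function is a hypothetical stand-in: it only counts words, whereas a real pipeline would call an embedding model, and the "vector store" here is an ordinary Python list. The chunk size and overlap (50 and 10 words) are illustrative choices, not recommendations.

```python
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: word counts, not true meaning."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def split_into_chunks(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end of the text
            break
    return chunks


def build_store(documents: dict[str, str]) -> list[dict]:
    """Ingest documents, chunk them, embed each chunk, and keep everything in a list."""
    store = []
    for doc_name, text in documents.items():
        for i, chunk in enumerate(split_into_chunks(text)):
            store.append({"doc": doc_name, "chunk_id": i, "text": chunk, "vector": embed(chunk)})
    return store


docs = {"bill.txt": "Payment is due on the 15th of each month. " * 20}
store = build_store(docs)
print(len(store), "chunks from", len(docs), "document")
```

The overlap gives a sentence that straddles a chunk boundary a better chance of appearing whole in at least one chunk, which is one common reason pipelines overlap their chunks.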

Phase 2: Answer time

This happens when you ask a question.

- Turn your question into an embedding: Now the question is also in the same meaning space.

- Retrieve the top matching chunks: The system pulls the best candidates.

- Assemble the context: Those retrieved chunks are added into the model’s input so the model can “see” them.

- Generate the answer: Now the model writes, grounded in what it was given.
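Phase 2 can be sketched the same way. This is a minimal illustration, not a production retriever: the word-count `embed` function stands in for a real embedding model, cosine similarity ranks the chunks, and the final "generate" step is left out. The code only builds the text you would send to a model, because the model call itself depends on which provider you use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: word counts, not real meaning vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Embed the question, score every chunk against it, and keep the top k."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def assemble_prompt(question: str, retrieved: list[str]) -> str:
    """Place the retrieved chunks in front of the question so the model can 'see' them."""
    context = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(retrieved))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


chunks = [
    "A late fee of $25 applies if payment is received after the due date.",
    "Your plan includes unlimited talk and text.",
    "Autopay customers receive a $5 monthly discount.",
]
question = "What is the late fee?"
prompt = assemble_prompt(question, retrieve(question, chunks, k=1))
print(prompt)
```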

How this ties to earlier lessons

- Tokens explain why you cannot paste everything.

- Context windows explain why only a limited set of chunks can fit.

- Chunking explains why the system needs smaller pieces.

- Embeddings explain how meaning search can work at all.
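The token and context-window limits show up in code as a budget. The sketch below assumes a rough rule of thumb of about 4 tokens per 3 English words (real tokenizers vary by model) and keeps adding the highest-ranked chunks until the next one would not fit.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 tokens per 3 English words (an assumption; tokenizers vary)."""
    return math.ceil(len(text.split()) * 4 / 3)


def fit_to_budget(ranked_chunks: list[str], budget: int) -> list[str]:
    """Keep the best-ranked chunks, in order, until the next one would exceed the budget."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > budget:
            break
        selected.append(chunk)
        used += cost
    return selected


# Three hypothetical chunks of 10 words each, already sorted best-first.
ranked = [" ".join([f"chunk{i}"] * 10) for i in range(1, 4)]
print(len(fit_to_budget(ranked, budget=30)), "of", len(ranked), "chunks fit")
```

Because it stops at the first chunk that overflows, a lower-ranked chunk never takes space ahead of a higher-ranked one.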

Common confusion 1: “Does the model learn from my files permanently?” Often, no. Many systems retrieve your content at answer time rather than retraining the model. The model is being guided, not rebuilt.

Common confusion 2: “If I upload more documents, answers always improve.” Not always. More documents can create noise. Quality and organization matter.

Common confusion 3: “Chunking is optional.” You can skip it, but retrieval quality usually suffers because the system cannot target the exact part you need.

Examples that land

Example 1: “What did I spend on subscriptions this month?”

Task context: You have a transaction export in your Monthly Money Pack.

Bad input: Tell me about my subscriptions.

Good input:

- Use my uploaded transaction export.

- Retrieve only the lines that look like recurring subscriptions.

- Then total them and list the merchants used for the total.

What improved and why: You made the model depend on retrieved lines, not on assumptions.
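Here is what "retrieve only the recurring lines, then total them" might look like if you did it yourself in Python. The export format and the recurring-charge rule (same merchant and same amount in at least two different months) are assumptions for illustration; your bank's export and your subscriptions may look different.

```python
from collections import defaultdict

# Hypothetical export rows: (date "YYYY-MM-DD", merchant, amount)
transactions = [
    ("2025-01-03", "StreamFlix", 15.49),
    ("2025-01-09", "Corner Grocery", 62.10),
    ("2025-01-12", "CloudBox", 2.99),
    ("2025-02-03", "StreamFlix", 15.49),
    ("2025-02-11", "Corner Grocery", 48.75),
    ("2025-02-12", "CloudBox", 2.99),
    ("2025-02-20", "Gym Pass", 30.00),
]


def find_recurring(rows, min_months=2):
    """Return (merchant, amount) pairs charged in at least `min_months` distinct months."""
    months_seen = defaultdict(set)
    for date, merchant, amount in rows:
        months_seen[(merchant, amount)].add(date[:7])  # "YYYY-MM"
    return {key for key, months in months_seen.items() if len(months) >= min_months}


def subscription_total(rows, month):
    """Total this month's recurring charges and list the merchants behind the total."""
    recurring = find_recurring(rows)
    lines = [(m, a) for d, m, a in rows if d.startswith(month) and (m, a) in recurring]
    total = round(sum(a for _, a in lines), 2)
    return total, sorted({m for m, _ in lines})


total, merchants = subscription_total(transactions, "2025-02")
print(f"Subscriptions this month: ${total} from {merchants}")
```

One limitation worth noticing: Gym Pass appears only once, so this rule misses a subscription that just started. That is why the good input asks for the merchants behind the total, so you can spot what was included or left out.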

Example 2: “Where does my bill explain late fees?”

Task context: The bill is long and has many sections.

Bad input: Summarize my bill.

Good input:

- Retrieve the exact chunk that mentions late fees or penalties.

- Quote the lines.

- Then explain them in plain English.

What improved and why: Retrieval can aim at a narrow target when you specify the target.
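A narrow target is easy to demonstrate. The sketch below deliberately simplifies by using keyword matching instead of embeddings: it splits a made-up bill into sections at blank lines and returns only the sections that mention late fees or penalties, ready to be quoted.

```python
import re

# Made-up bill text; sections are separated by blank lines.
bill_text = (
    "Account Summary\nYour balance is $84.20.\n\n"
    "Payment Terms\nPayment is due by the 15th of each month. "
    "A late fee of $25 applies to payments received after the due date.\n\n"
    "Service Details\nYour plan includes unlimited talk and text."
)


def split_sections(text: str) -> list[str]:
    """Treat each blank-line-separated block as one chunk."""
    return [s.strip() for s in text.split("\n\n") if s.strip()]


def find_sections(text: str, terms: list[str]) -> list[str]:
    """Return only the chunks that mention at least one target term (case-insensitive)."""
    pattern = re.compile("|".join(re.escape(t) for t in terms), re.IGNORECASE)
    return [s for s in split_sections(text) if pattern.search(s)]


matches = find_sections(bill_text, ["late fee", "penalty", "penalties"])
for section in matches:
    print(section)
```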

Example 3: “Why does my budget category look wrong?”

Task context: You suspect a merchant name change or inconsistent labels.

Bad input: Fix my budget.

Good input:

- Retrieve the transactions that are related in meaning to groceries.

- Group them.

- Then propose a cleaner category label for each group.

What improved and why: You used retrieval and grouping to reduce guesswork.
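Grouping can start even before meaning search. This sketch swaps in plain string normalization (lowercasing, then dropping store numbers, punctuation, and an example card-processor prefix) to group hypothetical merchant names from a bank export. It is not meaning-based retrieval, and that difference is the point of the example.

```python
import re
from collections import defaultdict


def normalize(name: str) -> str:
    """Reduce a raw merchant string to a rough comparison key."""
    name = name.lower()
    name = re.sub(r"^sq \*", "", name)   # example processor prefix such as "SQ *"
    name = re.sub(r"#\d+", "", name)     # store numbers like "#1023"
    name = re.sub(r"[^a-z ]", "", name)  # punctuation and any remaining digits
    return " ".join(name.split())        # collapse leftover spaces


def group_merchants(names: list[str]) -> dict[str, list[str]]:
    """Group raw merchant strings that share the same normalized key."""
    groups = defaultdict(list)
    for n in names:
        groups[normalize(n)].append(n)
    return dict(groups)


merchants = ["WHOLEFDS MKT #1023", "Whole Foods Market", "WHOLEFDS MKT #0456",
             "TRADER JOE'S #552", "Trader Joes", "SQ *CORNER GROCERY"]
for key, members in group_merchants(merchants).items():
    print(f"{key}: {members}")
```

Notice that "WHOLEFDS MKT" and "Whole Foods Market" still land in separate groups. Normalization catches formatting differences but not abbreviations; closing that gap is where meaning-based matching (or a quick human review) earns its place.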

One-screen recap

- Retrieval-augmented generation has two phases: preparation and answer time.

- Preparation breaks documents into chunks and creates embeddings.

- Answer time retrieves the best chunks and inserts them into the model’s input.

- The model writes using those chunks as its grounding.

Your 10-Minute Win

A step-by-step workflow you can use immediately

🎙️The "Voice-to-Calendar" Triage

When your brain is overloaded, you don’t need “more motivation.” You need a fast way to turn mental noise into a plan. This workflow takes a messy 3-minute rant and turns it into a clean table of Do Now vs. Schedule Later—so you stop carrying everything in your head and start moving the right things forward.

The Workflow

1. Record your “brain dump” (3 Minutes)

Open your phone’s voice recorder (Voice Memos on iPhone, Recorder on Android) and record a single 3-minute stream-of-consciousness memo. Say everything that’s on your mind—errands, bills, calls, family stuff, anything.

Tip: If something has a date (“dentist next month,” “rent,” “birthday”), say it out loud. Dates are gold for scheduling.

2. Upload it to Gemini + run the triage prompt (3 Minutes)

Open the Gemini mobile app and start a new chat.

- If Gemini lets you attach files: upload the voice memo.

- If uploading isn’t available: tap the microphone and play the memo out loud near your phone (it sounds silly, but it works).

Then copy/paste this prompt:

You are my “Voice-to-Calendar Triage” assistant.

Input: A 3-minute voice memo (uploaded OR played aloud).

Goal: Convert my messy brain dump into a clean plan I can act on today.

Rules:

1) Do NOT invent tasks. Only use what you hear.

2) Combine duplicates. Rewrite tasks as clear actions that start with a verb.

3) If a task is vague, ask ONE clarifying question at the end (max 3 questions total).

4) Sort into “Do Now” vs “Schedule Later” using this logic:

- Do Now = <15 minutes OR prevents a penalty/problem OR blocks other tasks.

- Schedule Later = takes focus/time, needs a specific time block, or depends on someone else.

5) Output EXACTLY in this format:

A) Triage Table

Task | Category (Money/Health/Home/Admin/Social/Errands/Other) | Do Now or Schedule Later | Est. Time | Next Action | Suggested Date/Time Block | Deadline (if any)

B) Today’s Top 3 (Do Now)

- 1)

- 2)

- 3)

C) Schedule Later “Calendar Blocks” (pick the 5 most important)

Event Title | Duration | Suggested Day | Suggested Time Window | Prep Needed

D) Quick sanity check

- What I’m overthinking (1 line)

- What I’m avoiding (1 line)

Now process my voice memo.
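If you want to see the sorting logic in rule 4 spelled out, here is a small Python sketch. The `Task` fields are hypothetical; Gemini applies the rule from your wording in the prompt, and this code only illustrates it. The key idea is that Do Now is an OR test: a task qualifies if any one condition is true.

```python
from dataclasses import dataclass


@dataclass
class Task:
    action: str
    est_minutes: int
    prevents_penalty: bool = False
    blocks_others: bool = False


def triage(task: Task) -> str:
    """Do Now if ANY condition from rule 4 holds; otherwise Schedule Later."""
    if task.est_minutes < 15 or task.prevents_penalty or task.blocks_others:
        return "Do Now"
    return "Schedule Later"


tasks = [
    Task("Pay the electric bill", 5, prevents_penalty=True),
    Task("Call the insurer about the claim", 30, blocks_others=True),
    Task("Deep-clean the garage", 120),
    Task("Text Sam about Saturday", 2),
]
for t in tasks:
    print(f"{t.action}: {triage(t)}")
```

Under this rule, a 30-minute task still lands in Do Now if it unblocks other work, which is usually what you want when your head is full.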

3. Clean it up and catch mistakes (2 Minutes)

Skim the Triage Table and look for three common issues:

- Too many “Do Now” items: If more than 5–7 tasks land in Do Now, tell Gemini: “Move anything non-urgent into Schedule Later and keep Do Now to the 5 highest-leverage tasks.”

- Made-up assumptions: If Gemini guessed details (price, dates, people), reply: “Replace assumptions with TBD and ask me one question per TBD item.”

- Bad time estimates: If a task is clearly longer than stated, correct it. Your calendar blocks depend on realism.

4. Create the asset: your “Do Now” list + Calendar Blocks (2 Minutes)

This is where you lock in the win.

- Copy B) Today’s Top 3 into your Notes app as: “Today — Top 3 (from Voice Triage)”

- Copy C) Schedule Later Calendar Blocks into Notes under: “This Week — Calendar Blocks”

- Optional (but powerful): Open your calendar and add just 1–2 of those blocks as real events (start small).

You now have a plan you can see, not a swirl you can feel.

The Payoff

In 10 minutes, you’ve turned mental clutter into a tangible asset: a Triage Table, a Today Top 3, and a short list of Calendar Blocks for the week. The stress reduction is immediate because your brain stops trying to “remember everything.” You also get a simple rule for future overload: dump → triage → schedule.

Transparency & Notes

- Tools used: Gemini mobile app (usable on the free tier).

- Privacy: Don’t include account numbers, addresses, or sensitive personal info in your voice memo. If you do, delete the memo and re-record.

- Limits: Free tiers can have usage limits, and file upload options may vary—if you can’t upload audio, use the microphone method and play the memo aloud.

- Educational workflow — not financial advice.

Follow us on social media and share Neural Gains Weekly with your network to help grow our community of ‘AI doers’. You can also contact me directly at admin@mindovermoney.ai or connect with me on LinkedIn.