Volume 3: Data, Data, Data!

Welcome back, everyone! The AI news cycle continues to move at warp speed, with dozens of significant announcements, product releases, and partnerships over the last week. Luckily, you’re in the right place to keep up, stand out, and take advantage.

Missed a previous newsletter (released every Tuesday at 10AM EST)? No worries, you can find them on the Archive page at MindOverMoney.ai. If you have content suggestions, a workflow idea, or want to share a success from your AI journey, reach out at admin@mindovermoney.ai. And don’t forget to follow us on X and TikTok as we begin our social media journey.

Let’s dive right in with Signals Over Noise, where we highlight what matters from the last week in AI news.

Signals Over Noise

We scan the noise so you don’t have to — top 5 stories to keep you sharp

1) OpenAI teams with Broadcom to build 10GW of custom AI chips

Summary: OpenAI announced a strategic collaboration with Broadcom to design and deploy 10 gigawatts of custom AI accelerators, with rollout beginning in 2026.

Why it matters: Purpose-built hardware can lower costs and boost performance for AI apps you use (ChatGPT, Sora) — and it diversifies the supply chain beyond off-the-shelf GPUs.

2) California passes law: AI chatbots must disclose they’re AI

Summary: A new California law, signed on Oct 13, 2025, requires consumer chatbots to clearly state they’re AI and adds reporting rules around suicide-prevention safeguards.

Why it matters: Expect clearer labels and safer defaults across consumer AI — helpful for beginners and a signal of where national policy may head next.

3) IMF chief: Most countries lack the ethical/regulatory base for AI

Summary: IMF Managing Director Kristalina Georgieva warned that many nations are unprepared on AI regulation and ethics, highlighting risks to financial stability and inclusion.

Why it matters: Policy gaps can slow deployments and create uncertainty; watch for frameworks that unlock (or constrain) AI growth in your market.

4) Spot a Sora fake — while you still can

Summary: With OpenAI’s Sora app fueling a wave of ultra-realistic AI videos, Axios outlines simple tells and context checks to help everyday users detect fakes — and notes watermark limits.

Why it matters: A beginner-friendly media-literacy primer: knowing how to verify AI video protects you from scams, misinformation, and bad financial signals circulating on social feeds.

5) Salesforce commits $15B to San Francisco, doubling down on AI

Summary: Ahead of Dreamforce, Salesforce pledged $15B over five years to expand AI initiatives, including an incubator hub and programs to help businesses adopt AI agents.

Why it matters: Enterprise demand for agentic AI remains strong — a leading indicator for job opportunities, ecosystem tools, and where budgets are headed.

AI Education for You

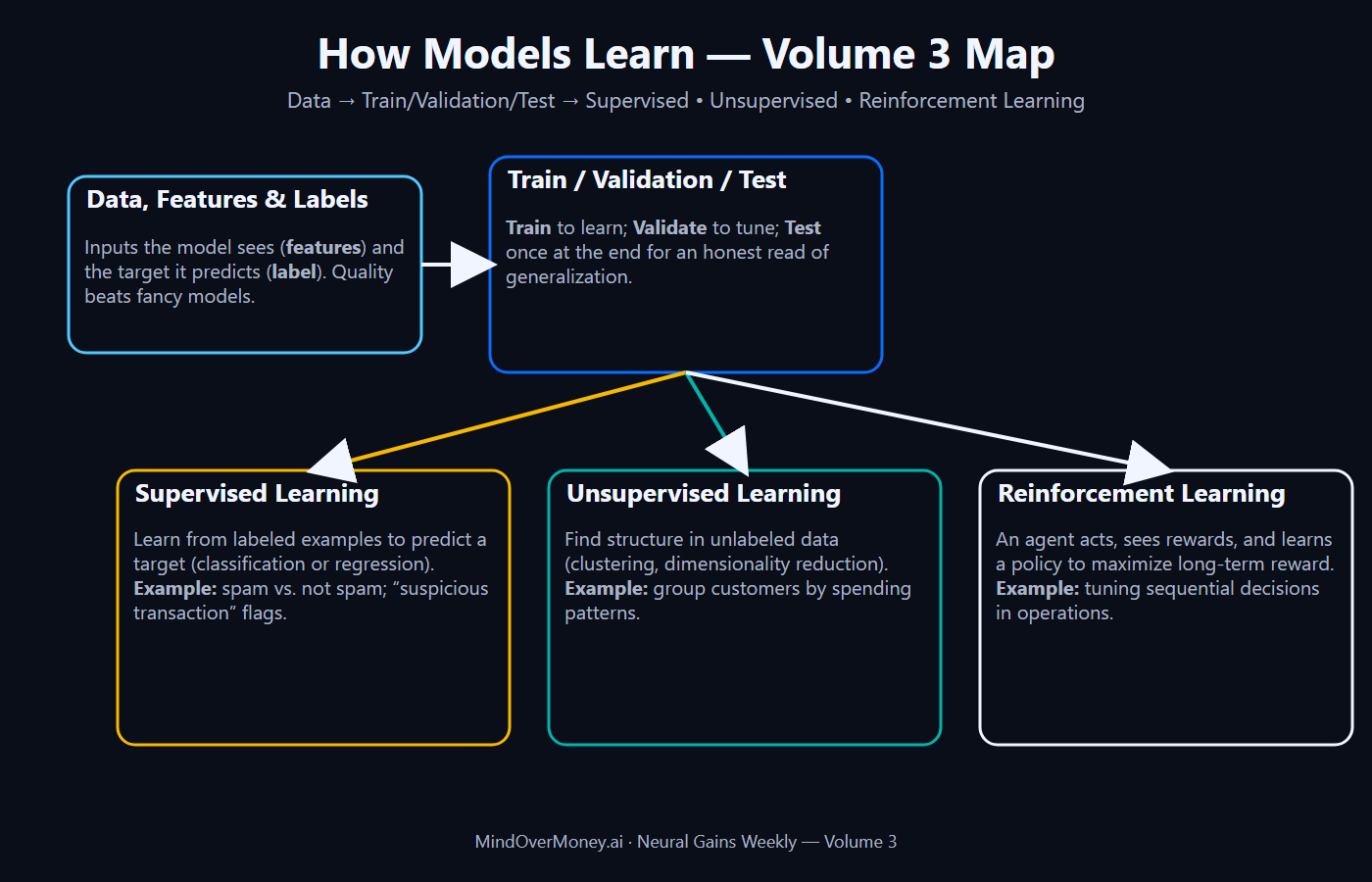

How Models Learn: Data, Splits, and Learning Types

So far, we covered the family tree—Artificial Intelligence → Machine Learning → Deep Learning—and peeked inside Neural Networks, Generative AI, and Large Language Models. Now we’ll focus on the data side of learning and illustrate the main ways models learn and train.

Data, Features & Labels:

- What it is:

  - Data is your raw information (rows in a spreadsheet).

  - Features are the input columns the model can look at (e.g., “amount,” “merchant,” “time of day”).

  - Label is the answer column you want the model to predict (e.g., “spam vs. not spam,” “groceries vs. travel”).

- Why it matters: Clear, accurate data usually beats fancy algorithms. If your inputs are messy or your labels are wrong, the model learns the wrong lesson.

- Everyday examples: A spam filter looks at features like sender, subject words, and time sent; the label is spam/not spam.

- Personal finance tie-ins: A budgeting tool might use features such as merchant, amount, and category hints to predict the label “category” (groceries, rent, etc.).
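To make the vocabulary concrete, here is a minimal Python sketch of a tiny transaction table split into features and labels. The merchants, amounts, and column names are made up for illustration; a real budgeting tool would have its own schema.

```python
# Hypothetical transactions: each dictionary is one row of data.
transactions = [
    {"merchant": "Fresh Mart", "amount": 54.20, "hour": 18, "category": "groceries"},
    {"merchant": "City Apartments", "amount": 1500.00, "hour": 9, "category": "rent"},
    {"merchant": "Fresh Mart", "amount": 23.75, "hour": 12, "category": "groceries"},
]

FEATURE_COLUMNS = ["merchant", "amount", "hour"]  # inputs the model may look at
LABEL_COLUMN = "category"  # the answer column we want predicted


def split_features_and_labels(rows):
    """Return (features, labels) as two parallel lists."""
    features = [{col: row[col] for col in FEATURE_COLUMNS} for row in rows]
    labels = [row[LABEL_COLUMN] for row in rows]
    return features, labels


features, labels = split_features_and_labels(transactions)
print(features[0])  # the inputs for the first transaction
print(labels)       # the answers, one per transaction
```

Notice that the label never appears among the features. If it did, the model would be handed the answer instead of learning to predict it.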

Train / Validation / Test:

- What it is: We divide past data into three buckets:

  - Train: The model learns here.

  - Validation: We tune the model here (try settings, pick what works best).

  - Test: We check final quality here; this data stays unseen until the end.

- Why it matters: If you judge the model on the same data it learned from, accuracy can look unrealistically high. A clean split gives an honest read.

- Everyday examples: Your phone’s photo app is tuned on a validation set, then judged on a separate test set so results aren’t biased.

- Personal finance tie-ins: If you design a rule to spot “recurring bills,” try it on 2018–2022 (train/validate), but only judge it on 2023 (test). If it works on new data, it’s useful.
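The recurring-bills example can be sketched as a split by year. This is one simple way to do it, assuming each row carries a `year` field and that the validation year comes before the test year; the function name and the sample data are hypothetical.

```python
def split_by_year(rows, validation_year, test_year):
    """Split rows chronologically into train, validation, and test buckets.

    Assumes validation_year < test_year. Rows dated after test_year are ignored.
    """
    train, validation, test = [], [], []
    for row in rows:
        year = row["year"]
        if year == test_year:
            test.append(row)
        elif year == validation_year:
            validation.append(row)
        elif year < validation_year:
            train.append(row)
    return train, validation, test


# Hypothetical recurring bill, one row per year from 2018 through 2023.
bills = [{"year": y, "merchant": "Streaming Co", "amount": 15.99} for y in range(2018, 2024)]

train, validation, test = split_by_year(bills, validation_year=2022, test_year=2023)
print(len(train), len(validation), len(test))
```

Splitting by date rather than at random keeps "future" data out of training, which mirrors how the rule will actually be used on new transactions.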

Supervised Learning:

- What it is: The model learns from labeled examples: you show inputs (features) paired with the correct answers (labels), and it learns to predict the label for new cases. Two common tasks:

  - Classification: pick a category (e.g., spam vs. not spam).

  - Regression: predict a number (e.g., next month’s spend).

- Why it matters: This is the workhorse of real-world AI—most business problems boil down to predicting a category or a number.

- Everyday examples: Email spam filters, photo apps that recognize pets vs. objects, apps that suggest categories for receipts.

- Personal finance tie-ins: Predict whether a transaction is suspicious or normal, or whether a new merchant is “groceries” vs. “dining.”
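Here is a toy classification sketch in Python. It is a deliberately simple word-overlap classifier, not what production tools use, and the merchant names and categories are invented. It "learns" by counting which words show up under each label, then predicts the label whose words best match a new merchant.

```python
from collections import Counter, defaultdict


def train_word_counts(examples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        for word in text.lower().split():
            counts[label][word] += 1
    return counts


def predict(counts, text):
    """Pick the label whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {
        label: sum(word_counts[w] for w in words)
        for label, word_counts in counts.items()
    }
    return max(scores, key=scores.get)


# Hypothetical labeled examples: (merchant description, category).
labeled = [
    ("fresh mart grocery", "groceries"),
    ("green valley grocery", "groceries"),
    ("luigi pizza restaurant", "dining"),
    ("sunrise cafe restaurant", "dining"),
]

model = train_word_counts(labeled)
print(predict(model, "corner grocery"))
print(predict(model, "thai restaurant"))
```

The pattern is the same in real systems: labeled examples go in, a model comes out, and the model is then asked about cases it has never seen.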

Unsupervised Learning:

- What it is: The model looks for patterns in unlabeled data (no answer column). Two useful ideas:

  - Clustering: group similar items together (e.g., shoppers with similar habits).

  - Dimensionality Reduction (e.g., PCA, or Principal Component Analysis): compress many columns into a smaller, more understandable view.

- Why it matters: It helps you explore data, spot groups, and find anomalies before you ever build a predictor.

- Everyday examples: Photo apps grouping similar faces; stores clustering products that get bought together.

- Personal finance tie-ins: Group your spending into natural clusters (weekday coffee vs. weekend dining) and flag outliers (an unusual spike) for review.
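Flagging an unusual spike needs no labels at all. The sketch below uses one common approach, distance from the median measured in median absolute deviations. The threshold of 5 and the sample amounts are assumptions chosen for illustration, not a recommended setting.

```python
from statistics import median


def flag_outliers(amounts, threshold=5.0):
    """Return amounts that sit far from the median.

    Distance is measured in median absolute deviations (MAD).
    If every value is near-identical (MAD of zero), nothing is flagged.
    """
    center = median(amounts)
    mad = median(abs(a - center) for a in amounts)
    if mad == 0:
        return []
    return [a for a in amounts if abs(a - center) / mad > threshold]


# Hypothetical coffee purchases with one unusual spike.
coffee_spend = [4.50, 5.00, 4.75, 5.25, 4.50, 48.00, 5.00]
print(flag_outliers(coffee_spend))  # [48.0]
```

The median is used instead of the average because a single large purchase drags the average upward and can hide the very spike you are looking for.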

Reinforcement Learning:

- What it is: A learning loop where an agent takes actions in an environment and gets rewards or penalties. Over time, it learns a policy (a strategy) that earns more reward.

- Why it matters: This shines when decisions happen step-by-step and affect future steps (games, robotics, operations). It also influences how some language models are fine-tuned to be more helpful (e.g., RLHF — Reinforcement Learning from Human Feedback).

- Everyday examples: Systems that learn which content sequence keeps users engaged without overwhelming them.

- Personal finance tie-ins: A simulated plan that adjusts automatic savings amounts over time to keep a safe cash cushion (just a thought model, not advice).
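The action-and-reward loop can be sketched with the simplest reinforcement learning setup, a multi-armed bandit, where the environment has no changing state. The strategy shown is epsilon-greedy (mostly pick the best-known action, occasionally try a random one). That choice, the reward probabilities, and all parameter values are assumptions for illustration only.

```python
import random


def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy agent choosing among several actions.

    reward_probs[i] is the (hidden) chance that action i pays a reward of 1.
    Returns the agent's learned reward estimates and how often it chose each action.
    """
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions
    pulls = [0] * n_actions

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit

        reward = 1.0 if rng.random() < reward_probs[action] else 0.0

        # Update the running average reward for the chosen action.
        pulls[action] += 1
        estimates[action] += (reward - estimates[action]) / pulls[action]

    return estimates, pulls


estimates, pulls = run_bandit([0.2, 0.5, 0.8])
print(pulls)      # how often each action was chosen
print(estimates)  # the agent's learned view of each action's payoff
```

The agent is never told which action is best. It discovers that through trial and feedback, and the action it ends up favoring is its learned policy.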

Common misconceptions to head off:

- “If my accuracy looks great, I don’t need a validation/test set.”

  - Reality: Without a proper split, you might be grading the model on the same data it memorized. Always keep a final test set untouched.

- “Unsupervised learning just means clustering.”

  - Reality: It also includes tools like PCA (Principal Component Analysis) to simplify data and anomaly detection to catch oddballs, which makes it great prep before supervised tasks.

- “Large language models think like humans and ‘know’ facts.”

  - Reality: LLMs predict likely next words. They can sound confident yet be wrong. For facts, connect them to sources and ask for quotes or citations.

Quick recap (one-liners):

- Data, Features & Labels: Inputs in, answers out—clean, accurate data wins.

- Train / Validation / Test: Train to learn, validate to tune, test once to judge fairly.

- Supervised vs. Unsupervised vs. RL:

  - Supervised = learn from answers you provide.

  - Unsupervised = find patterns without answers.

  - RL = learn by doing, guided by rewards.

Your 10-Minute Win

A step-by-step workflow you can use immediately

🎧 Earnings Call Summarizer (Free, fast, beginner-friendly)

Why this matters: It’s officially earnings season, the perfect time to learn about companies and find your next investment opportunity. Earnings calls are dense — 60+ minutes of executive remarks and Q&A. In 10 minutes, you can grab a free transcript, feed it to a free AI, and get a crisp one-page brief with key numbers, guidance, risks, and sentiment — so you can act faster, like a pro.

Step 1 — Get the transcript (2 – 4 minutes):

Pick one of these free sources:

A) Company Investor Relations Page:

- Most public companies post the call replay and/or transcript on their IR site after the call. Look for Investors → Events/Presentations → the latest Earnings Call.

B) YouTube (official or analyst uploads):

- Open the video → click Show transcript (in the three-dot menu). Copy the text and paste it into a doc. This is a built-in free YouTube feature.

- Tip: If you only find audio/video, YouTube’s Show transcript is the fastest free path. If you find a PDF transcript on the IR site, that’s even cleaner — copy the text directly.

Step 2 — Choose your free AI helper (1 minute):

Use any of these free options to summarize your transcript:

- Gemini — Gemini’s free plan allows document uploads and summaries with usage limits.

- ChatGPT — Free ChatGPT supports long-form summaries within length limits.

- Perplexity — Perplexity Free handles moderate length inputs and supports follow-up questions.

(All three options are free at time of writing; longer files may require splitting into sections.)
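If a transcript is too long for the tool you picked, a small script can split it into sections for you. This is a minimal sketch: the function name is hypothetical, and the 12,000-character default is a placeholder, since actual limits vary by tool and change over time.

```python
def split_into_sections(text, max_chars=12000):
    """Split text into chunks of at most max_chars, breaking between paragraphs.

    A single paragraph longer than max_chars is kept whole rather than cut mid-sentence,
    so that one chunk may exceed the limit.
    """
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


# Hypothetical transcript: ten speaker turns separated by blank lines.
transcript = "\n\n".join(f"Speaker {i}: " + "word " * 50 for i in range(10))

chunks = split_into_sections(transcript, max_chars=1000)
print(len(chunks))
```

Paste the sections one at a time, ask for a summary of each, then ask the AI to merge the section summaries into a single brief.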

Step 3 — Paste the transcript & create the one-page brief (3 minutes):

- If you were able to obtain a PDF transcript of the earnings call, attach it to your AI chat.

- If not, paste the transcript of the call obtained from YouTube.

Then enter this prompt:

“You are my equity analyst with 30 years of experience reviewing, understanding, and analyzing quarterly earnings reports. Summarize this earnings call into a one-page brief with:

- Company / Ticker / Quarter / Date

- Headline results: revenue, EPS (GAAP & non-GAAP if mentioned), YoY %, QoQ %, gross margin, free cash flow (exact numbers)

- Guidance: next quarter & full-year (ranges + midpoints); note any raise/cut vs prior

- Drivers & growth areas: products, segments, geographies

- Risks/Watchouts: supply, FX, pricing, competition, regulation

- Management quotes (max 2): short high-signal lines with speaker names

- Q&A sentiment: 2–3 bullets (bullish/bearish tone)

- Questions to track next quarter: 3 bullets

Rules: Use only info in the transcript. Format in sections (use bullets + bold numbers). Cite line snippets when quoting. If a metric isn’t in the text, write ‘not disclosed.’”

Step 4 — Add quick KPI table + “So What” (2 – 3 minutes):

- Ask the AI to append a compact table and takeaway:

Follow-up Prompt:

“Add a 6-row table with: Metric | Current | Prev Q | YoY | Guide (midpoint) for Revenue, EPS, Gross Margin, FCF, key segment, and one core KPI (e.g., MAUs, ARPU). Then write 3 bullets titled ‘So What?’ (explain what a retail investor should watch for next quarter).”

If you want a file: ask “Provide the table as CSV I can paste into Google Sheets.”
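It helps to know how the table’s percentage columns and guidance midpoint are calculated so you can spot-check the AI’s math. A minimal sketch, with all figures invented for illustration:

```python
def pct_change(current, previous):
    """Percentage change from previous to current."""
    return (current - previous) / previous * 100


def midpoint(low, high):
    """Middle of a guidance range."""
    return (low + high) / 2


# Hypothetical figures, in millions of dollars.
revenue_now = 1200.0
revenue_prev_quarter = 1150.0
revenue_year_ago = 1000.0
guide_low, guide_high = 1250.0, 1300.0

print(f"QoQ: {pct_change(revenue_now, revenue_prev_quarter):.1f}%")      # QoQ: 4.3%
print(f"YoY: {pct_change(revenue_now, revenue_year_ago):.1f}%")          # YoY: 20.0%
print(f"Guide midpoint: {midpoint(guide_low, guide_high):.1f}")          # Guide midpoint: 1275.0
```

QoQ compares against the previous quarter, while YoY compares against the same quarter a year earlier, which helps filter out seasonal swings.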

The Payoff:

In about 10 minutes you have a clean one-pager and a KPI mini-table from a 60-minute call — numbers, guidance, risks, and quotes you can compare quarter-to-quarter.

💡 Pro tip: Save each summary in a folder by ticker. Over time, you’ll spot patterns in guidance and credibility faster than reading analyst recaps.

👉 Your Turn

Pick one stock you follow, grab its latest transcript, run the prompt, and reply with your top “So What?” bullet. We might feature a few next week.

Transparency & Notes for Readers:

- Transcripts: Recent calls are free on company Investor Relations pages; older ones may move behind paywalls. Start with IR or YouTube transcripts.

- AI tools cost: Gemini Free, ChatGPT Free, and Perplexity Free all support this workflow; each has length or rate limits.

- Accuracy: AI can misread numbers from noisy text — verify key figures in the original transcript.

- Compliance: Educational workflow only — not investment advice.
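The accuracy check can be partly automated. The sketch below is a rough first pass, not a full fact-checker: it lists every number in the AI summary that does not appear anywhere in the transcript. The function names and sample text are hypothetical.

```python
import re

# Matches numbers such as 20, 2.15, or 1,200 (the thousands separator is removed later).
NUMBER_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def extract_numbers(text):
    """Return the set of numeric strings found in text, with commas removed."""
    return {match.replace(",", "") for match in NUMBER_PATTERN.findall(text)}


def unverified_numbers(summary, transcript):
    """Numbers that appear in the summary but nowhere in the transcript."""
    return sorted(extract_numbers(summary) - extract_numbers(transcript))


# Hypothetical example: the summary has transposed the EPS digits.
transcript = "Revenue was $1,200 million, up 20% year over year. EPS came in at $2.15."
summary = "Revenue: $1,200M (+20% YoY). EPS: $2.51."

print(unverified_numbers(summary, transcript))  # ['2.51']
```

This compares numbers as text, so a legitimate conversion (for example, "1.2 billion" in the summary versus "1,200 million" in the transcript) will also be flagged. Treat the output as a list of figures to look up by hand.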

Founder's Corner

Real-world learnings as I build, succeed, and fail

Ever have a deadline and no idea where to start? Hit a knowledge gap that stalls a project? Try a new tool, only to drown in errors with no clue how to fix them? You’re not alone. That was me in the first few weeks of building MindOverMoney.ai on Ghost, which offers solid out-of-the-box themes, but none fit what I needed. So I went down the customization rabbit hole—and I’m glad I did. It led to my second significant “aha” moment: I can write code (with ChatGPT as my co-pilot).

There were 3 customizations needed to realize my vision for the website:

- Simplified layout on the main page

- Custom layouts and navigation for ‘Founder’s Corner’ and ‘Prompt Library’

- Automation of Neural Gains Weekly into the ‘Archive’

Now, I don’t know how to read or write code, nor am I familiar with IDEs or coding platforms. But I read about AI coding use cases and how advancements over the past year have unlocked new potential for people like me (and many of you). The barrier to entry has been knocked down, but you can only understand the power of AI coding once you experience it. You can make real progress without being “technical” if you work in small, manageable steps and ask better questions. These are the lessons that helped me move from stuck to building.

- Build your vision

  - Know exactly what you want to build. Clear communication of your vision is key.

- Work on one task at a time

  - Focus on each step individually and verify the code deployment matches your vision.

- Be precise

  - When I gave ChatGPT exact errors and clear outcomes, I got useful help.

- Ask questions

  - If you don’t know what to do, that’s okay. Ask for help and clarity.

- Expect friction, budget patience

  - Failure and rework are part of the learning journey.

- Learn as you go

  - I didn’t need to learn everything. I needed just enough knowledge to build out my vision.

- Celebrate wins

  - Reinforce your learning by celebrating every win, especially when building code.

I understand this topic can be intimidating, especially if you’re like me and have little to no background in coding. But that’s the point. You can build tools, apps, websites, and passion projects without having technical skills. Even if you fail and struggle to build a finished product, you’ll start to unlock the full potential of AI and give yourself an advantage over 99% of people using these tools. We often focus on outputs and are trained to think that way, whether in school or at work. AI is different, and so is the learning journey. The power lies in understanding and creating something that was out of reach just a year ago. It’s also incredibly fun and rewarding to see an idea come to life at warp speed. Once you start, you won’t want to stop.

Research Google AI Studio, Claude (Anthropic), and Codex (ChatGPT) to understand how others are building with these tools. Then, build something that will make your life easier and experience the power of AI.

Goals & Milestones:

In the spirit of transparency, I plan on sharing updates related to my goals. This has been a challenge since I don’t really know what to expect when it comes to subscription growth, but socializing goals will help me stay accountable throughout this journey.

Follow us on social media and share Neural Gains Weekly with your network to help grow our community of ‘AI doers’. You can also contact me directly at admin@mindovermoney.ai or connect with me on LinkedIn.