Volume 6: Show Me the Tokens!

Happy November! Time flies when you are learning AI!

This week kicks off with something new — the very first Neural Gains Weekly song, created using Suno AI. It’s a fun experiment in blending creativity and tech, and a reminder that learning AI can be as enjoyable as it is practical.


In this issue, AI Education breaks down how models read text through tokens — the foundation behind how AI “understands” language and why shorter, cleaner inputs lead to better (and cheaper) results.

Our 10-Minute Win builds on last week’s research workflow by turning it into a living Watchlist Thesis Card System inside Notion.

And in Founder’s Corner, I share the macro trends guiding where I focus my AI learning — infrastructure, regulation, and corporate adoption.

Enjoy the song, dive into the issue, and keep experimenting.

Missed a previous newsletter? No worries: you can find every issue on the Archive page. Don’t forget to check out the Prompt Library, where I share templates to use in your AI journey.

Signals Over Noise

We scan the noise so you don’t have to — top 5 stories to keep you sharp

1) OpenAI completes restructuring, refreshes Microsoft partnership

Summary: OpenAI converted into a public benefit corporation after Delaware and California AGs declined to oppose, clearing governance hurdles and aligning a refreshed Microsoft partnership.

Why it matters: This structure eases large-scale fundraising—fuel for models, chips, and data centers—while keeping a mission anchor and solidifying the Microsoft tie-up that underpins OpenAI’s scale.

2) Seizing the AI Opportunity

Summary: OpenAI lays out a near-term agenda for national AI opportunity: skills & education, industry adoption, and regional investment, paired with pragmatic policy guardrails.

Why it matters: Direct-from-source playbook for turning AI hype into productivity—useful for readers tracking where policy and capital will flow next.

3) Storage stocks rip on AI demand

Summary: Western Digital and Seagate jumped on guidance and multi-year orders linked to AI data-center buildouts, signaling stronger visibility into 2026.

Why it matters: Beyond GPUs, AI needs massive storage—a clean second-order signal that the infra boom is broadening to HDD/NAND and memory supply chains.

4) Introducing Teams Mode for Microsoft 365 Copilot

Summary: Microsoft rolls out Teams Mode—bring coworkers into your Copilot conversation for secure group AI chats directly inside Teams.

Why it matters: AI moves from solo helper to team workflow—planning, follow-ups, and decisions with everyone (and the AI) in the loop.

5) Alphabet hikes capex (again) on AI demand

Summary: Alphabet raised 2025 capital-spending plans to $91–$93B on strong ad and Cloud growth, with spend aimed heavily at AI infrastructure (TPUs, data centers).

Why it matters: Another proof point that the AI build-out is still accelerating—good read-through for suppliers (chips, storage, power) and for operators planning multi-year AI budgets.

AI Education for You

Tokens & Tokenization 101: How AI “reads” your text

Before a model can understand anything you write, it must read your text. It does not read characters or whole words. It reads tokens—small pieces of text. Tokenization is how text gets split into those pieces. Every model has a page size (called a context window): the maximum number of tokens it can read at once. If your text is longer than the page, some of it is cut off.

One more key idea: tokens drive cost. Reading and writing more tokens means more compute. More compute means higher cost. That is why long inputs, long outputs, and larger page sizes often cost more—and why higher-quality models that can handle more tokens usually carry a higher price.

Core lesson — in plain English

What is a token?

A token is a small piece of text the model can read. A token is often shorter than a word. For example, “budgeting” may be split into smaller pieces; even a space or punctuation can count as a token. Two sentences with the same number of words can have different token counts.

What is tokenization?

Tokenization is the splitting of your text into tokens before the model sees it. The model only learns patterns over tokens, not raw text.
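To make the splitting concrete, here is a toy sketch in Python. It is not how any real model tokenizes: production tokenizers learn tens of thousands of pieces from data (often with byte-pair encoding) and usually attach the leading space to the next word. The tiny vocabulary and the greedy longest-match rule below are assumptions chosen purely for illustration.

```python
import re

# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of pieces from data.
VOCAB = {"budget", "ing", "this", "is", "tight"}

def split_word(word: str) -> list[str]:
    """Greedily split one word into the longest known pieces, falling back to single characters."""
    pieces, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            if word[i:j].lower() in VOCAB:
                pieces.append(word[i:j])
                i = j
                break
        else:  # no known piece starts here: emit one character
            pieces.append(word[i])
            i += 1
    return pieces

def tokenize(text: str) -> list[str]:
    """Split text into words, single spaces, and punctuation, then break words into pieces."""
    tokens = []
    for chunk in re.findall(r"\w+|\s|[^\w\s]", text):
        tokens.extend(split_word(chunk) if chunk[0].isalnum() else [chunk])
    return tokens

print(tokenize("Budgeting is tight."))  # ['Budget', 'ing', ' ', 'is', ' ', 'tight', '.']
print(len(tokenize("This is tight.")))   # 6
```

Both sentences have three words, yet the first produces 7 tokens and the second 6, because “Budgeting” is split into two pieces while spaces and the period count on their own.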

What is the page size (context window)?

It is the maximum number of tokens the model can read at once. Think of it like a single page. Your tokens fill the page from top to bottom. When the page is full, extra tokens fall off.

What gets cut when it is too long?

Anything that does not fit on the page is ignored. That can hide important fees in a long bank statement, an old detail in a bill email chain, or the key fact buried at the bottom of a receipt.
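A minimal sketch of the “page” idea follows, assuming the simplest possible rule: keep the first N tokens and drop the rest. Real products handle overflow in different ways (some reject the request, some drop the oldest chat messages), so the page size and cut rule here are illustrative assumptions, and splitting on whitespace stands in for a real tokenizer.

```python
def fit_to_page(tokens: list[str], page_size: int) -> tuple[list[str], list[str]]:
    """Return (kept, dropped): the first page_size tokens are kept, the rest fall off."""
    return tokens[:page_size], tokens[page_size:]

# Invented statement: many ordinary lines, with the late fee at the very end.
statement = ["coffee 4.50"] * 50 + ["LATE FEE 35.00"]
tokens = " ".join(statement).split()  # crude stand-in for a real tokenizer

kept, dropped = fit_to_page(tokens, page_size=80)
print(len(tokens), len(kept), len(dropped))  # 103 80 23
print("LATE" in kept)                        # False: the fee never reached the model
```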

Why fewer, clearer tokens help:

- Short, focused text fits the page.

- The model can focus its attention on what matters.

- You save compute and money because fewer tokens are processed.

How tokens tie to cost:

- More tokens in: more to read → more compute.

- More tokens out: longer answers → more compute.

- Bigger page size: the model can read more at once → more memory and compute behind the scenes → usually higher price.

- Stronger models: better understanding often comes with larger page sizes and heavier computation, which is why paid tiers often unlock better models and longer inputs.
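The cost bullets above reduce to simple arithmetic: tokens in times an input price, plus tokens out times an output price. The sketch below uses made-up per-million-token prices as placeholders, not any provider’s actual rates; check your provider’s pricing page for real numbers.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 price_in_per_m: float, price_out_per_m: float) -> float:
    """Estimated dollar cost of one request, given prices per 1 million tokens."""
    return (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000

# Hypothetical placeholder prices: $1.00 per 1M input tokens, $4.00 per 1M output tokens.
PRICE_IN, PRICE_OUT = 1.00, 4.00

full_statement = request_cost(50_000, 1_000, PRICE_IN, PRICE_OUT)  # paste everything
short_summary = request_cost(500, 1_000, PRICE_IN, PRICE_OUT)      # paste a labeled summary
print(f"full: ${full_statement:.5f}, summary: ${short_summary:.5f}")
```

With these placeholder prices, trimming the input from 50,000 to 500 tokens cuts the estimate from about $0.054 to about $0.0045 per request. The output share stays the same because the answer length did not change.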

Contrast & clarity — common confusion:

- “Tokens are words.” Not quite. A token is often smaller than a word. A short word might be one token; a longer word can be split into several.

- “If I paste everything, it will be smarter.” Not always. Very long text can dilute what matters or overflow the page so key parts get cut.

- “If it missed a detail, the model failed.” Sometimes yes. Often the detail did not fit or was buried under less useful text.

- “Cost is only about how long the answer is.” Cost depends on both the tokens you send and the tokens you ask it to write.

Examples that land:

1) Long bank statement vs. what fits

- You paste a full monthly statement (many pages).

- The page fills before a late fee near the end.

- The model summarizes spending but misses the fee because it never saw it.

2) Bill email chain with long quotes

- A bill support thread includes repeated quotes of earlier emails.

- The repeats eat tokens and push out the original agreement details.

- Result: the model cannot confirm the promised credit because that part was cut off.
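One practical fix for example 2 is to remove the repeated quotes before pasting, keeping each message once. This sketch assumes quoted lines start with “>” (a common email convention, but not universal), uses whitespace splitting as a rough size estimate, and runs on an invented thread.

```python
def dedupe_thread(email_text: str) -> str:
    """Keep each distinct line once, ignoring leading '>' quote markers."""
    seen: set[str] = set()
    kept: list[str] = []
    for line in email_text.splitlines():
        clean = line.lstrip("> ").strip()  # drop quote markers and surrounding spaces
        if clean and clean not in seen:
            seen.add(clean)
            kept.append(clean)
    return "\n".join(kept)

def rough_token_count(text: str) -> int:
    """Very rough size estimate: count whitespace-separated pieces."""
    return len(text.split())

# Invented thread: each reply quotes everything before it.
thread = """Me: Following up. Can you confirm the credit?
> Agent: We will apply a $40 credit on your next bill.
> > Me: My bill went up $40 without notice.
Agent: We will apply a $40 credit on your next bill.
> Me: My bill went up $40 without notice.
Me: My bill went up $40 without notice."""

cleaned = dedupe_thread(thread)
print(cleaned)
print(rough_token_count(thread), "->", rough_token_count(cleaned))
```

The promised $40 credit survives while the repeats disappear. This also drops lines that legitimately repeat (such as a second “Thanks”), so skim the result before pasting.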

3) Budget note: short summary vs. raw rows

- Option A: paste 200 raw transaction lines.

- Option B: write a short, labeled summary (“groceries up $120 due to party; restaurants down $75; two new ride charges”).

- Option B fits, costs less, and gives a clearer answer.
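Option B can be partly automated. This sketch assumes your transactions are already a list of (category, amount) pairs, which is a hypothetical format since real bank exports vary, and rolls them up into one compact, labeled line that uses far fewer tokens than the raw rows.

```python
from collections import defaultdict

def summarize(transactions: list[tuple[str, float]]) -> str:
    """Total spending per category and return one compact, labeled line."""
    totals: dict[str, float] = defaultdict(float)
    for category, amount in transactions:
        totals[category] += amount
    # Largest categories first so the most important numbers come early.
    ranked = sorted(totals.items(), key=lambda kv: -kv[1])
    return "; ".join(f"{cat} ${total:,.2f}" for cat, total in ranked)

# Invented sample rows standing in for a month of transactions.
rows = [("groceries", 85.10), ("restaurants", 42.00), ("groceries", 120.00),
        ("rides", 18.75), ("rides", 22.25)]
print(summarize(rows))  # groceries $205.10; restaurants $42.00; rides $41.00
```

You can then add the “why” by hand (for example, “groceries up due to party”), which is the part only you know.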

Quick recap:

- Tokens are the small pieces of text a model reads.

- Tokenization splits your text into those pieces.

- The page size is how many tokens fit at once; overflow is cut off.

- More tokens → more compute → more cost.

- Keep inputs short, focused, and labeled to fit the page and improve results.

Your 10-Minute Win

A step-by-step workflow you can use immediately

🗂️ Watchlist Thesis Card System

Last week, we built your AI Stock Research Assistant — a fast, Perplexity-powered way to pull verified news and filings into a clean research brief.

This week, we take the next step: turning that brief into a living thesis system that keeps your ideas organized, testable, and accountable.

Collecting facts is easy; turning those facts into repeatable conviction is the real edge. This workflow shows you how to convert one Perplexity brief into a reusable Notion Watchlist Thesis Card you can update every quarter to see how your thinking evolves.

Step 1 — Import your research brief (2 minutes)

- Open your Volume 5 “Stock Research Assistant” brief for a ticker you follow.

- If you didn’t experiment last week, access the workflow from Volume 5 here.

- Copy the brief (business snapshot, catalysts, risks, sources).

- Paste it into ChatGPT (Free) with this transform prompt:

Transform this sourced brief into my Watchlist Thesis Card fields. Fields:

• One-sentence Thesis (your concise “why this could work”)
• Variant Perception (how your view differs from consensus)
• Time Horizon (e.g., 6–18 months)
• Key KPIs to watch (ticker-specific; exact definitions/units)
• Bullish Triggers (3 if/then statements that increase conviction)
• Bearish Triggers (3 if/then statements that decrease conviction)
• Top Catalysts (next 1–2 quarters)
• Top Risks
• Links (IR site, latest 10-Q/10-K, latest earnings PR)

Rules: Use only the text I’ve pasted and the official links; if a metric is missing, write “not disclosed.” Keep under 300 words, use bold section headers.

In seconds, you’ll have a structured thesis card built from your own research.

💡 Pro tip: Select ‘GPT-5 Thinking’ in ChatGPT to see the power of a reasoning model.

Step 2 — Save it in Notion (Desktop; 3–4 minutes)

Notion access & setup:

- Go to notion.com → Sign up Free (email or SSO).

- From the left sidebar, click “Create” → “Database → Table” to make a simple table database.

- You might have to click the ‘down arrow’ at the top left.

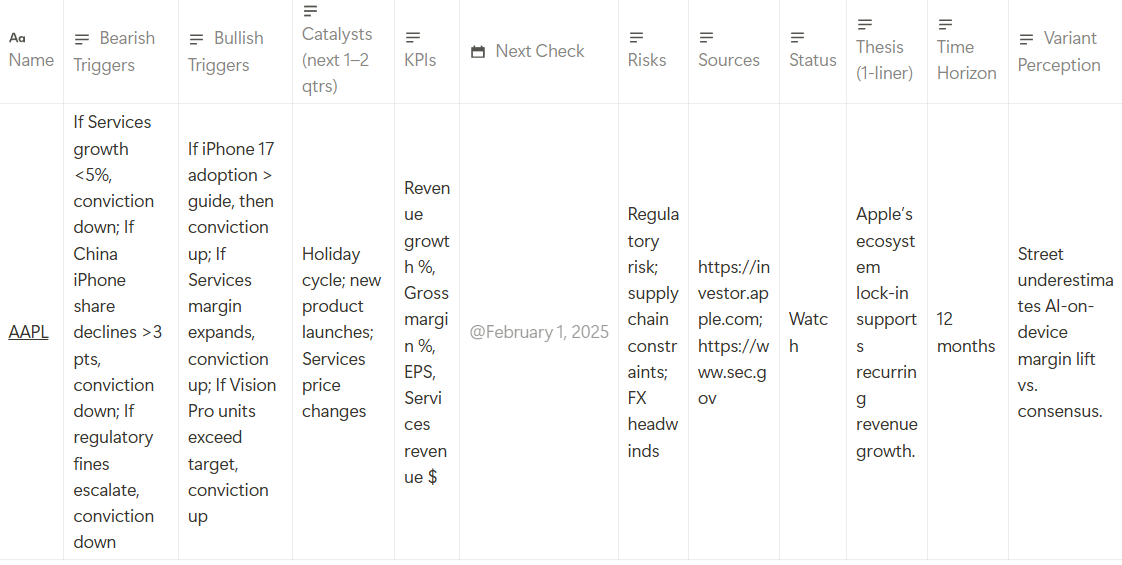

Create a database named “Watchlist Thesis Cards” with properties:

- Ticker (Text)

- Thesis (1-liner) (Text)

- Variant Perception (Text)

- Time Horizon (Select: 3 / 6 / 12 / 18 / 24 months; add the options under ‘Edit property’)

- KPIs (Text)

- Bullish Triggers (Text)

- Bearish Triggers (Text)

- Catalysts (next 1–2 qtrs) (Text)

- Risks (Text)

- Sources (URL)

- Next Check (Date)

- Status (Select: Watch / Research / On-Hold / Exited; add the options under ‘Edit property’)

Paste your ChatGPT output from Step 1 into a new row/page, then fill in Ticker, Next Check (usually the next earnings date), and Status.

Prefer to skip manual setup? ➡️ Download the Notion CSV schema and import it directly into Notion (Import → CSV). It will create the database with all properties for you.
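If you would rather build a CSV yourself, here is a minimal sketch that writes the property names from the list above as a header row, plus one placeholder row. Two assumptions to check on import: Notion typically uses the first column as each page’s title (which is why Ticker comes first), and it may bring most columns in as plain text, so you may need to switch Time Horizon and Status to Select, Sources to URL, and Next Check to Date afterward. The file name and sample values are placeholders.

```python
import csv

# Property names copied from the Watchlist Thesis Cards list; Ticker first so it can become the page title.
COLUMNS = [
    "Ticker", "Thesis (1-liner)", "Variant Perception", "Time Horizon", "KPIs",
    "Bullish Triggers", "Bearish Triggers", "Catalysts (next 1–2 qtrs)", "Risks",
    "Sources", "Next Check", "Status",
]

def write_schema(path: str) -> None:
    """Write a header row and one placeholder row for a Notion CSV import."""
    example = {col: "" for col in COLUMNS}
    example.update({"Ticker": "TICKER", "Time Horizon": "12 months", "Status": "Watch"})
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=COLUMNS)
        writer.writeheader()
        writer.writerow(example)

write_schema("watchlist_thesis_cards.csv")
```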

Your Notion database should look like this:

Step 3 — Stay current automatically (Desktop only; 3 minutes)

A) Set a Google Alert (free) for filings & IR updates

- Open google.com/alerts in your browser.

- In the box, type: TICKER ("guidance" OR "8-K" OR "investor relations" OR "earnings")

- Click Show options → How often: At most once a day (or At most once a week).

- Sources: Automatic; Language: English; Region: Any; Deliver to: your email.

- Click Create Alert. (You can add multiple alerts per ticker if you like.)
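If you follow several tickers, a tiny helper can generate the alert text so every alert uses the same pattern. This sketch only builds the string to paste into the box at google.com/alerts; you still create each alert by hand in the browser. The function name and example tickers are illustrative.

```python
def alert_query(ticker: str,
                terms: tuple[str, ...] = ("guidance", "8-K", "investor relations", "earnings")) -> str:
    """Build the Step 3A alert text for one ticker: TICKER ("term" OR "term" ...)."""
    quoted = " OR ".join(f'"{term}"' for term in terms)
    return f"{ticker} ({quoted})"

for t in ["MSFT", "GOOGL"]:
    print(alert_query(t))
# MSFT ("guidance" OR "8-K" OR "investor relations" OR "earnings")
# GOOGL ("guidance" OR "8-K" OR "investor relations" OR "earnings")
```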

B) Add a Calendar reminder for “Next Check” (next earnings)

- Open calendar.google.com → click the date of your Next Check.

- If you use another calendar service, add the reminder there.

- Enter the event title: Next Check — [TICKER] Thesis Card.

- Click More options → paste your Notion page URL into the Description.

- Set a Notification for 1 day before + 1 hour before, then Save.
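If you prefer files over clicking, the same reminder can be written as an .ics file, which Google Calendar can import (Settings → Import & export) along with most other calendar apps. This sketch follows the iCalendar format (RFC 5545): a 30-minute event at a local time you choose, with alerts 1 day and 1 hour before. The product ID, UID scheme, date, and Notion URL are placeholders, and some calendar apps ignore alarms in imported files, so check the notifications after importing.

```python
from datetime import datetime, timedelta, timezone

FMT = "%Y%m%dT%H%M%S"

def esc(text: str) -> str:
    """Escape characters that RFC 5545 reserves in TEXT values."""
    return text.replace("\\", "\\\\").replace(";", "\\;").replace(",", "\\,").replace("\n", "\\n")

def fold(line: str) -> str:
    """Fold lines longer than 75 characters (assumes ASCII); continuation lines start with a space."""
    parts = [line[:75]] + [" " + line[i:i + 74] for i in range(75, len(line), 74)]
    return "\r\n".join(parts)

def next_check_ics(ticker: str, start: datetime, notion_url: str) -> str:
    """Build a one-event calendar file with alerts 1 day and 1 hour before the start time."""
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//Example//Thesis Card Reminder//EN",  # placeholder product ID
        "BEGIN:VEVENT",
        f"UID:{ticker}-{start.strftime(FMT)}@example.invalid",  # placeholder UID scheme
        "DTSTAMP:" + datetime.now(timezone.utc).strftime(FMT) + "Z",
        "DTSTART:" + start.strftime(FMT),  # floating local time, no time zone
        "DTEND:" + (start + timedelta(minutes=30)).strftime(FMT),
        "SUMMARY:" + esc(f"Next Check - {ticker} Thesis Card"),
        "DESCRIPTION:" + esc(notion_url),
    ]
    for trigger in ("-P1D", "-PT1H"):  # 1 day before, then 1 hour before
        lines += ["BEGIN:VALARM", "ACTION:DISPLAY", "TRIGGER:" + trigger,
                  "DESCRIPTION:" + esc(f"Review {ticker} thesis card"), "END:VALARM"]
    lines += ["END:VEVENT", "END:VCALENDAR"]
    return "".join(fold(line) + "\r\n" for line in lines)  # RFC 5545 uses CRLF line endings

ics = next_check_ics("TICKER", datetime(2026, 1, 28, 9, 0), "https://www.notion.so/your-thesis-card-page")
with open("next_check.ics", "w", newline="", encoding="utf-8") as f:
    f.write(ics)
```

The event starts at a fixed time of day rather than being all-day, so the “1 hour before” alert fires on the morning of the check rather than late the night before.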

This keeps your thesis alive—news lands in your inbox, and your calendar nudges you to review on schedule.

Step 4 — Refresh and reality-check (2 minutes)

When a new PR/10-Q drops or your alert fires, paste the excerpt into ChatGPT with:

Update my Watchlist Thesis Card for [TICKER].
• Compare disclosed KPIs vs. my KPIs list (mark hit/miss with numbers).
• Note which Bullish/Bearish Triggers fired.
• Summarize what changed in 3 bullets.
• End with: Action Posture = Add / Hold / Trim / Avoid (reflection only; not advice).

Log the changes in the same Notion page. Over time, you’ll see how your thesis and the actual results evolve side by side.
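The hit/miss step in the update prompt is also easy to check yourself. This sketch compares KPI targets from your card against reported values; the KPI names and numbers are invented, it assumes higher is better for every KPI, and a KPI missing from the report is marked “not disclosed,” matching the rule in the Step 1 prompt.

```python
def score_kpis(targets: dict[str, float], reported: dict[str, float]) -> dict[str, str]:
    """Mark each KPI hit/miss (assuming higher is better); missing KPIs are 'not disclosed'."""
    results = {}
    for kpi, target in targets.items():
        if kpi not in reported:
            results[kpi] = "not disclosed"
        else:
            actual = reported[kpi]
            status = "hit" if actual >= target else "miss"
            results[kpi] = f"{status} ({actual} vs {target})"
    return results

# Invented example values for illustration only.
targets = {"Cloud revenue growth %": 30.0, "Operating margin %": 25.0, "Backlog $B": 100.0}
reported = {"Cloud revenue growth %": 34.0, "Operating margin %": 23.5}
for kpi, result in score_kpis(targets, reported).items():
    print(f"{kpi}: {result}")
```

For KPIs where lower is better (for example, churn), flip the comparison for that KPI before logging the result.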

The Payoff

In 10 minutes, you’ve upgraded a one-off research brief into a repeatable decision system: a structured thesis, explicit KPIs, clear triggers, scheduled reviews, and a growing log of what actually changed—so you act on process, not impulse.

Transparency & Notes for Readers

- All tools are free: ChatGPT Free, Notion Free, Google Alerts, Google Calendar.

- Limits: ChatGPT Free has input length caps—paste concise highlights, not entire filings.

- Data discipline: Use official IR/EDGAR text for numbers.

- Educational workflow — not financial advice.

If you have a workflow idea for me to create or want to share a success from your AI journey, reach out at admin@mindovermoney.ai.

Founder's Corner

Real-world learnings as I build, succeed, and fail

I often talk with friends, coworkers, and family about AI, and I’m constantly asked, ‘What should I be paying attention to in order to stay ahead?’ My answer is always the same: start with education and do your best to pay attention to the macro trends. The reality is that the AI news cycle moves like a flash flood, and it is increasingly hard to keep up. I feel that same pressure, but I have adopted several strategies to stay sane and sift through the noise. Focusing on macro trends has helped me organize my thoughts more efficiently and retain information better. It has also helped me avoid the fringe of the news cycle that plays on people’s emotions by spreading fear and hysteria about AI. Today, I’ll share three macro trends, what I find interesting about each, and why they matter to your AI education journey.

Infrastructure Build-Out

Several trends fall into this bucket, and I plan to explore each in more detail in future volumes. It’s incredible to see the capital expenditures going into data centers, power grid facilities, and chip manufacturing. Companies, mainly the ‘Magnificent 7’, are leading the charge in AI capex, and it’s hard to follow by reading headlines alone. There are constant conversations about an AI bubble, with many pundits drawing comparisons to the ‘Dot-Com’ bubble of 1995–2001. I see the arguments on both sides and fully appreciate the cautious approach economic experts are taking. But my perspective is more bullish: I see the capex wave as a strategic competition among the largest companies in the world. Whoever builds the infrastructure to lower costs (e.g., $ per token, compute costs) will pressure smaller companies to match prices that could put them out of business. Are we in an AI bubble? Probably. But there is opportunity in every situation, which is why the AI infrastructure race is a trend all of us should pay attention to.

Here are a few headlines that highlight the massive financial stakes being put down to bring AI to life:

- McKinsey estimates $6.7T in global data-center investment needed by 2030 to meet compute demand, $5.2T of that for AI-class facilities.

- Alphabet spent $23.95B in capex (49% of operating cash flow), with Meta and Microsoft even higher as a share of cash flow and Amazon near 90%. These are signals of long-horizon bets on AI capacity.

- Meta’s guidance is eye-popping. 2025 capex outlook lifted to $70–72B (almost double 2024).

- Power is the new bottleneck. IEA projects data-center electricity use doubling to ~945 TWh by 2030 (slightly more than Japan’s total use today); AI is the biggest driver. In the U.S., data centers account for nearly half of electricity-demand growth through 2030.

- U.S. grid pressure. EPRI (via U.S. DOE) estimates data centers could consume up to 9% of U.S. electricity by 2030.

Top takeaway: Follow the money to better understand the winners of the future, impacts to your personal life, and realistic expectations for future AI advancement.

Regulation

This is a hot topic, not just in the U.S., but across the globe. AI introduces new challenges that governments and regulators cannot keep up with. And, like anything else, there are politics driving AI regulation that might not be best for consumers (or humanity as a whole). This area of AI seems to have the most noise and the biggest consequences for how the world evolves as AI becomes more prevalent and powerful. In the U.S. specifically, there are several states pushing for AI regulation, while there has been little consensus on what (if anything) should be done at the federal level. California has introduced several pieces of AI-specific legislation to help put guardrails in place to protect people from the potential negative consequences of AI. Time will tell if these measures are successful, but AI regulation will be an important piece of the puzzle as AI becomes more embedded into everyday life.

Top takeaway: Compliance and lawmaking will affect the speed at which AI labs can execute and will influence transparency (for good or bad) across the industry.

Corporate Adoption

Hype or buzzwords? Pilot vs. production? ROI vs. model change? For those of you who work in corporate America, the noise can be deafening. Every week, a new report is released about AI taking jobs, a fresh round of layoffs, or CEOs highlighting automation gains. But the extremes of these headlines seem isolated to big tech, at least for now. I believe this is due to how early we are in the AI adoption lifecycle. Many companies are struggling to find ROI from true generative AI capabilities and are stuck in ‘pilot’ mode. It’s impossible to predict when we will start to see breakthroughs across the Fortune 500, but I believe they will arrive within the next 18 months. The technology is improving at a rapid pace, and compute costs continue to decline. We’ll hear more and more headlines about ‘agentic workflow automation’ and ‘AI employees’ that drive ROI and bottom-line impact. It’s imperative that we all pay attention to this trend so we can learn how to incorporate generative AI into our professional lives.

Top takeaway: AI adoption does not automatically mean business impact. Following ROI and workflow automation helps me sift through the noise and study the companies leading AI transformation in the workplace.

It can feel overwhelming to keep up with AI news, but you are ahead of most people by taking time to read Neural Gains Weekly. The only way to get ahead is to put in the effort, even if that is only 10-15 minutes a week. Stay consistent, follow trends, and experiment with AI tools. See you next week!

Goals Tracker:

Follow us on social media and share Neural Gains Weekly with your network to help grow our community of ‘AI doers’. You can also contact me directly at admin@mindovermoney.ai or connect with me on LinkedIn.